Explainable AI with Profium

Given the growing interest in using AI tools to give users recommendations, or to make decisions based on suggestions from AI tools, companies may have to comply with regulations that require them to explain why, for example, an application was or was not approved. Such a decision might affect someone's credit rating or the approval of a claim.

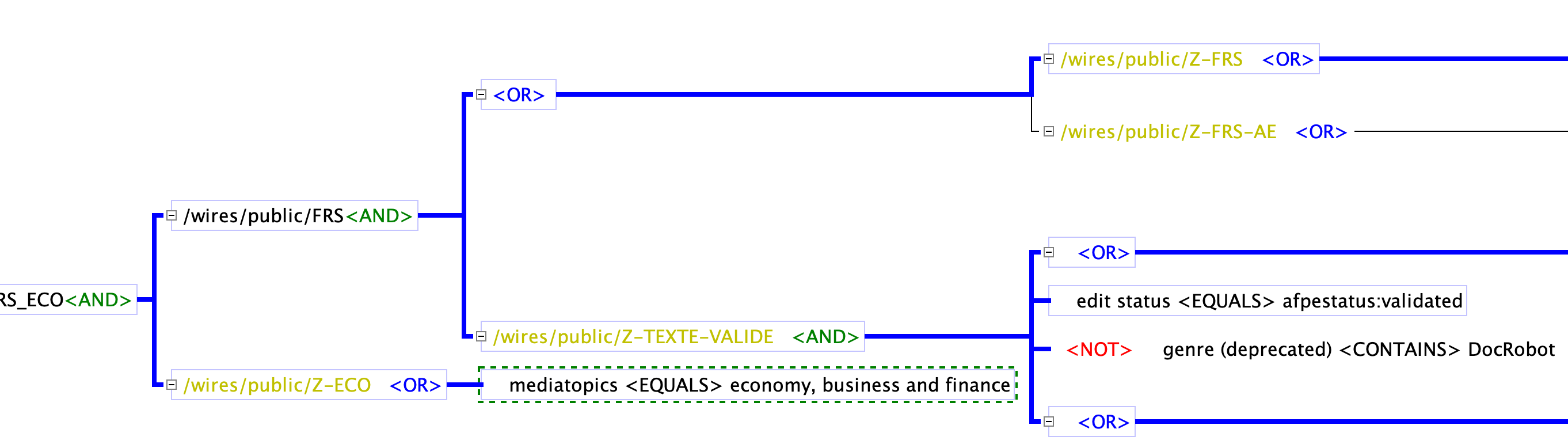

Profium's rule-based AI engine explains why a recommendation was made or why an application was not approved. The explanation is presented visually as a logical decision tree rendered on screen, which human users can follow step by step. The rendered image can be stored so that the explanation is available later for an audit or inquiry. The image above shows such a tree, with the path that evaluated to true for a given rule highlighted in blue.
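To illustrate the general idea of recording which path through a decision tree was taken, here is a minimal sketch. It is not Profium's engine or API: the `Node`, `Leaf` and `evaluate` names and the loan rules (income threshold, payment defaults) are hypothetical. Each condition that is evaluated is logged together with its result, and that log is the raw material for an explanation such as the highlighted path in the image.

```python
from dataclasses import dataclass
from typing import Callable, Union


@dataclass
class Leaf:
    outcome: str  # final decision, e.g. "approved" or "rejected"


@dataclass
class Node:
    label: str                     # human-readable description of the condition
    test: Callable[[dict], bool]   # predicate evaluated against the input facts
    if_true: "Tree"
    if_false: "Tree"


Tree = Union[Node, Leaf]


def evaluate(tree: Tree, facts: dict) -> tuple[str, list[tuple[str, bool]]]:
    """Walk the tree and return the outcome plus the path of (condition, result) pairs."""
    path: list[tuple[str, bool]] = []
    node = tree
    while isinstance(node, Node):
        result = node.test(facts)
        path.append((node.label, result))  # record every decision for later audit
        node = node.if_true if result else node.if_false
    return node.outcome, path


# Hypothetical rules, for illustration only.
loan_rules = Node(
    "income >= 30000",
    lambda f: f["income"] >= 30000,
    if_true=Node(
        "no payment defaults",
        lambda f: f["defaults"] == 0,
        if_true=Leaf("approved"),
        if_false=Leaf("rejected"),
    ),
    if_false=Leaf("rejected"),
)

outcome, path = evaluate(loan_rules, {"income": 42000, "defaults": 1})
print(outcome)  # rejected
for label, result in path:
    print(f"{label}: {result}")
```

Because the path is plain data, it can be rendered as a diagram, stored alongside the decision for later inquiries, or turned into text.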

We at Profium are exploring whether spoken output using natural-language constructs would add value for some user groups, for example, those who wish to understand an AI decision during a phone conversation.
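One simple way to prepare such spoken output is to turn a recorded decision path into ordered sentences that a text-to-speech system could read aloud. The sketch below is hypothetical and not a description of Profium's implementation: it assumes the path is available as a list of (condition, result) pairs, and the function name `verbalize` and the example conditions are illustrative only.

```python
def verbalize(outcome: str, path: list[tuple[str, bool]]) -> str:
    """Turn a decision path into plain sentences suitable for reading aloud."""
    if not path:
        return f"The application was {outcome}."
    sentences = []
    for i, (condition, held) in enumerate(path):
        opener = "First" if i == 0 else "Then"
        status = "was met" if held else "was not met"
        sentences.append(f"{opener}, the condition '{condition}' {status}.")
    sentences.append(f"Therefore, the application was {outcome}.")
    return " ".join(sentences)


# Hypothetical path; conditions are phrased in plain language so they read well aloud.
path = [("income of at least 30,000", True), ("no payment defaults", False)]
print(verbalize("rejected", path))
```

Writing each condition label in plain language, rather than as a formula, matters more for speech than for an on-screen diagram, since a listener cannot glance back at the tree.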